Evaluating Digital Products with User Research and Usability Testing

A key component of user-centred design is the evaluation of digital products with real users, recruited from the target demographic. A variety of methods and approaches can be used, depending on the specific goals of the project, the stage of development and the complexity of the product.

There is a strong business case for carrying out usability testing while products are still being developed. At that stage the cost of change is small and the number of design alternatives is high, so user research on prototypes forms a key part of the development process.

Attributes of User Research

There are many different ways of delivering user testing, but all share the following fundamental attributes:

- They involve users being asked to attempt tasks similar to those they would attempt in the real world

- The users are in some way representative of the client’s target audience

- The users interact with the prototype as naturally as possible and provide feedback, generally by using a think aloud protocol

- The observations are analysed and recommendations made to iterate the prototype.

With these core elements in mind, what separates the different methods is:

- Where the research takes place

- With how many users

- What is the subject of the research

- What data we want to capture

USABILITY EVALUATION

Research Process

The methodology for conducting qualitative research (usability evaluations) of this type involves the following:

- Recruitment: Participants recruited against the target profiles specified by the client

- Preparation:

  - A test script and discussion guide designed to facilitate the participant’s interaction with the design

  - A facility to carry out the research

  - Equipment set up to record the sessions, and devices for the participant to use (i.e. tablet, smartphone, PC)

- Research Session:

  - Skilled moderation of each of the sessions

  - Observation and recording of issues

- Analysis & Reporting

Participant Numbers & Session Length

By the time we get to the design testing stage we are normally carrying out the usability evaluation on all platforms – smartphone, tablet and PC. But in our experience, we don’t need to run 5 sessions on each platform because we have normally flushed out serious issues in previous rounds.

In most cases we run ten sessions of approximately 60 minutes each over two days of research, with the following split across platforms:

- Smartphone = 4 participants

- PC = 4 participants

- Tablet = 2 participants

We generally find that the tablet design can be informed by the findings on smartphone and PC, but we like to run a couple of tablet sessions so that we do not miss an obvious issue with breakpoints or key information falling below the fold.

Research Facility and Viewing

Research facilities provide viewing through a one-way mirror or via a screen so that the design team and stakeholders can see, first hand, how participants get on with the developing designs. As the people viewing are in the same location as the moderator there is the opportunity to discuss what they observe between sessions and begin to plan updates and changes.

Because research facility viewing rooms tend to be soundproof, it is also an opportunity for the design team and stakeholders to collaborate and discuss what they are seeing without disturbing the session.

However, research facilities come at a cost. Alternatively, the research can be run almost anywhere: in meeting rooms, participants’ homes, clients’ offices and so on. We can stream the sessions across the internet, so remote live viewing is possible.

Remote viewing does have limitations:

- Only the screen of the device is visible, not the participant’s facial expressions

- Viewing video for extended periods over the internet can be a little unreliable because video is bandwidth-heavy

- The screen resolution can make it difficult to view fine details

An alternative is to review the high-definition session videos, which we record as a matter of course and upload to a shared Dropbox folder. Bandwidth allowing, we will upload these as we go and immediately share a link, so you are able to watch with a delay of about an hour.

Test Equipment

We provide all the technology required to run the user research sessions.

This includes:

- The test laptop, which is loaded with specialist software for recording picture-in-picture high-definition video of the screen the participant is using plus their facial expressions

- The software needed to connect smartphone and tablet devices so that we can record the screen

The setup we use for recording mobile sessions relies on a hardware and software configuration that needs nothing more than the power cable plugged into the smartphone. The participant can pick up the phone, hold it and interact with it naturally, with no attachments for overhead cameras, no cradles and no need for the device to be fixed in position on the desk.

We have a range of devices we provide for the testing, including iOS and Android smartphones, tablets and Microsoft PCs. We are happy to use your devices if you need us to and can generally connect to them with ease.

Test Script, Moderation, Analysis and Reporting

All our projects are run by one of our team of highly experienced UX consultants, each of whom has been independently evaluated and awarded Accredited Practitioner status. Our consultants all have a minimum of five years’ experience, the necessary qualifications and the capabilities to run this type of design research.

Test Script

In discussion with the client, our UX consultant will develop the test script that includes the tasks and scenarios that will guide the participants’ interaction, plus any questions you need answered by the research.

We document this within a Research Plan, which contains all other details concerning the research, and is shared with you for iteration and sign off. A typical session structure might look like this:

- Before they start

  - Discussion about the context of use, current behaviours and attitudes towards the subject of the research.

- Tasks

  - We will create tasks covering the key journeys, interactions, features and functions that are to be evaluated

  - Questions connected to tasks that the moderator must ensure are answered.

- Closing Interview

  - A discussion about their experience

Moderation

All our consultants have many years of experience running research of this type and, unless otherwise requested, our approach to moderation is as follows:

- To verbally communicate the tasks to participants to avoid formality

- To allow the user to interact freely with the prototype and not to lead them

- To interrupt the user only when they are hesitant or confused, and only if doing so will not break the momentum of the task or lose potential learnings from their behaviour

- To question them after a task or sub-task if necessary

On occasion, other customers have asked us to adopt a more formal moderation approach, and we are happy to alter our style if need be.

During the user testing sessions the moderator will be observing and making notes to use later for analysis. These may be time-stamped so that the moderator can find the corresponding moment when reviewing the video of the sessions.

Analysis & Reporting

After the research the consultant will carry out the analysis and start to create the report, if that is the deliverable required. Where reporting is required we follow User Experience best practice reporting standards.

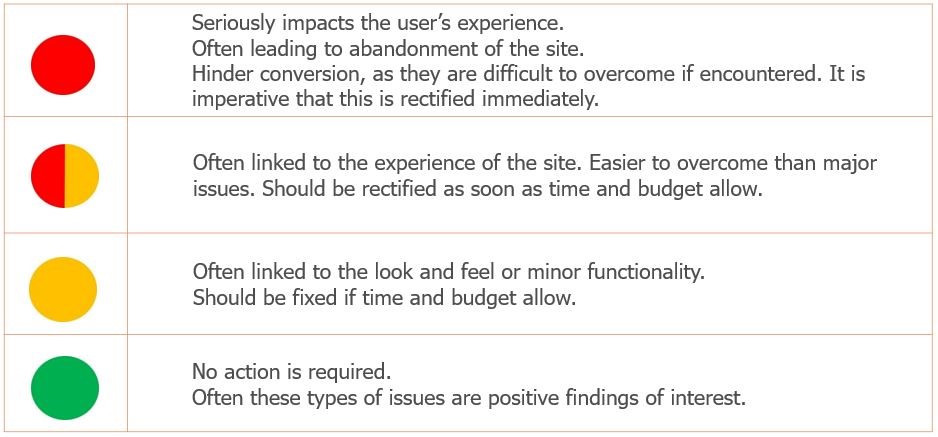

We utilise a traffic light reporting scheme to categorise observations and assign severity ratings as shown below:

Traffic Light Reporting Scheme

CASE STUDY:

In a generative study for a hotel chain we reviewed an existing app ahead of the redesign process. The recruitment targeted participants from their entire user base, including business and consumer users, groups and individuals. The browse and search journeys were rigorously tested, but so too were the payment, account management, email messages and loyalty scheme. The study also included visits to competitor apps so that users could identify areas they preferred that could be referenced in the redesign.

This study was carried out in a research facility because the client wanted to hear the feedback first hand, but it isn’t unusual for this stage of research to take place in users’ homes, as we did for an insurance comparison company ahead of a redesign, or in clients’ offices or meeting rooms. The decision generally comes down to cost, your availability to spend a couple of days out of the office, and the location of users. Session videos are always provided free of charge, so you are still able to see what happened even if you can’t attend.

PROCESS DESIGN TESTING

Preparing for Research

The approach to process testing is very similar to that used for usability testing with the core elements present:

- The test assets

- The participants

- The location of the research

- The preparation, moderation, analysis and reporting.

The biggest differences with process testing are that the test assets have to be created and that the reporting is normally in the form of revised process designs.

Process designs can be delivered to us as user journey maps, use case workflows, process flow diagrams and more. These assets explain how the process will work, the possible interactions, error paths, alternative flows, success criteria and more. But they cannot be placed in front of users in this form, as they would simply confuse them.

PROCESS CARDS:

The approach we take is to develop colour coded process cards that represent different attributes in the process.

A recent project included the following card types:

It is often the case that creating the cards alone throws up issues and omissions in the processes that can be rectified before user testing takes place. For that reason, we recommend allowing additional time in the project for this stage to go through a few rounds of iteration.

Carrying out the Testing

If you have observed usability testing from the viewing room in a research facility you will already have a pretty good idea of what happens with process testing. Participants are recruited against the target user profile (persona) and attend a 45 to 60 minute session on their own, moderated by a senior UX consultant. Once they have sat down and been made to feel relaxed with a few opening questions, we begin the process testing.

Each set of process cards represents a user journey or sub-journey and the first card in the set is the user story. The participant is handed the user story card and asked to read it. The card may say something like:

- You want to log in to your online bank account

- You search for bank.co.uk to view your account and click through to the login screen.

- You cannot remember your logon details and the website advises you to contact support

How will you proceed?

The user will have a number of choices presented on the card and these will lead to different routes through the process via associated cards.
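One way to picture a card set is as a small graph: each card carries its text and the choices that lead on to other cards. The sketch below is illustrative only. The card IDs, choice labels and follow-on cards are hypothetical (only the user story wording echoes the example above). It checks for choices that point to a card that has not been written and replays one participant's route through the cards.

```python
# Hypothetical card set: IDs, choice labels and follow-on cards are
# illustrative assumptions, not taken from a real project.
cards = {
    "story": {
        "text": "You cannot remember your logon details and the website "
                "advises you to contact support. How will you proceed?",
        "choices": {"Call support": "call", "Use live chat": "chat"},
    },
    "call": {
        "text": "You phone the support line.",
        "choices": {"Verify identity": "verified"},
    },
    "chat": {
        "text": "You open a live chat.",
        "choices": {"Verify identity": "verified"},
    },
    "verified": {"text": "Your logon details are reset.", "choices": {}},
}


def missing_cards(cards):
    """Return IDs that a choice points to but for which no card exists."""
    targets = {t for card in cards.values() for t in card["choices"].values()}
    return sorted(targets - set(cards))


def walk(cards, start, picks):
    """Follow a participant's choices from `start`; return the cards visited."""
    route = [start]
    for pick in picks:
        route.append(cards[route[-1]]["choices"][pick])
    return route


print(missing_cards(cards))
print(walk(cards, "story", ["Use live chat", "Verify identity"]))
```

Running a check like `missing_cards` while the cards are being drafted is one way to catch an omitted step before a participant reaches it in a session.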

Our goal in the research is to understand whether the process supports the way the user naturally wants to interact. By observing their behaviour and listening to their verbalised thought processes and feedback, we can refine and optimise the processes.

Sharing the Findings

The best way to share the findings from process testing is to provide revised process maps or flows as the main deliverable. A report provides the narrative explaining why the process has been changed in the way it has. It takes each step in each process and identifies what worked, what didn’t, and how it needs to change or be adapted.

Together these deliverables will inform how the wireframes or prototype should be designed to support the user journey and process.

COMPARATIVE USABILITY TESTING

COUNTERBALANCING:

To make sure that the evaluation of multiple prototypes is unbiased we use a method called counterbalancing. Rather than have each user interact with the prototypes in the same order, i.e. prototype ‘a’, then ‘b’, then ‘c’ and so on, we vary the order between participants so that every prototype is interacted with the same number of times and appears in each position in the order equally often.
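As a minimal sketch of the idea, the orders can be generated by rotating the list of prototypes so that each one takes a turn at being seen first. This is one common way to counterbalance and is an assumption for illustration, not necessarily how any particular protocol was drawn up. The version labels and participant numbers are placeholders.

```python
from collections import Counter


def rotated_orders(versions):
    """Return every rotation of `versions`: one order per starting version."""
    return [versions[i:] + versions[:i] for i in range(len(versions))]


def assign_orders(participants, versions):
    """Give each participant the next rotation in turn, cycling as needed."""
    orders = rotated_orders(versions)
    return {p: orders[i % len(orders)] for i, p in enumerate(participants)}


versions = ["Vs1", "Vs2", "Vs3"]   # placeholder prototype labels
participants = [1, 2, 3, 4, 5, 6]  # placeholder participant numbers
plan = assign_orders(participants, versions)

# Tally how often each version lands in each position (0 = seen first).
position_counts = Counter(
    (version, position)
    for order in plan.values()
    for position, version in enumerate(order)
)

for participant, order in plan.items():
    print(participant, " -> ".join(order))
```

With six participants and three versions each rotation is used twice, so every version is seen first, second and third by two participants. Rotation balances position only; it does not balance which version immediately follows which, and the tallies only come out even when the number of participants is a multiple of the number of versions.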

The following is a real test protocol from a project with three prototype versions (only two of them on smartphone) tested across three platforms:

| Day/Participant | Desktop | Tablet | Smartphone | |||||

| Day 1 | Vs1 | Vs2 | Vs3 | Vs1 | Vs2 | Vs3 | Vs1 | Vs2 |

| 1 | 1st | 2nd | 3rd | 6th | 5th | 4th | ||

| 2 | 2nd | 3rd | 1st | 4th | 6th | 5th | ||

| 3 | 3rd | 1st | 2nd | 5th | 4th | 6th | ||

| 4 | 1st | 2nd | 3rd | 5th | 4th | |||

| 5 | 2nd | 3rd | 1st | 5th | 4th | |||

| 6 | 3rd | 1st | 2nd | 4th | 5th | |||

| Day 2 | Vs1 | Vs2 | Vs3 | Vs1 | Vs2 | Vs3 | Vs1 | Vs2 |

| 1 | 5th | 4th | 3rd | 1st | 2nd | |||

| 2 | 3rd | 5th | 4th | 2nd | 1st | |||

| 3 | 4th | 3rd | 5th | 1st | 2nd | |||

| 4 | 1st | 2nd | 3rd | 5th | 4th | 3rd | ||

| 5 | 1st | 2nd | 3rd | 5th | 4th | |||

| 6 | 5th | 4th | 3rd | 1st | 2nd | |||

Test Protocol Example

This type of testing relies on the moderator being well organised and making good notes, and it can be very useful to have a note taker in addition to the moderator to keep track of the versions and the order.

BENCHMARKING EVALUATION

Carrying out the Evaluation

The test setup is very simple, as the tasks and scenarios to be used are identical for both prototype and live. We use a counterbalancing approach to remove order bias, so that the live and prototype versions are each seen first and second an equal number of times.
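Before the sessions it can be worth checking that a planned running order really is balanced. The sketch below is a hypothetical helper, not part of any tool mentioned here: it tallies how often each version is seen first and second and reports whether the four tallies are equal. The participant numbers and the alternating assignment are assumptions for illustration.

```python
from collections import Counter


def first_second_counts(plan):
    """Count how often each version is seen first and second."""
    counts = Counter()
    for order in plan.values():
        for position, version in zip(("first", "second"), order):
            counts[(version, position)] += 1
    return counts


def is_balanced(plan):
    """True when both versions appear first and second equally often."""
    counts = first_second_counts(plan)
    return len(counts) == 4 and len(set(counts.values())) == 1


# Alternate the order: odd-numbered participants see the live version first.
plan = {
    p: ("live", "prototype") if p % 2 else ("prototype", "live")
    for p in range(1, 7)
}

print(first_second_counts(plan))
print(is_balanced(plan))
```

An odd number of participants can never pass this check with two versions, which is one reason to plan sessions in multiples of two for this kind of comparison.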

The participant will interact with each, one after another, attempting the same task and providing verbal feedback plus observed behaviours.

The criteria against which the comparison is carried out need to be well thought through and the tasks and test protocol planned accordingly. Participants can’t always make the leap from prototype to live and so their feedback can be based on an unfair judgment.

If we have prepared well, we can set that aside and focus on the key areas, such as:

- Interaction

- User Journey

- Function of features

These will be the basis for the benchmark and the scoring / reporting format we have agreed with you.

CASE STUDY:

One of our hotel clients was developing a new app and asked us to carry out a benchmarking evaluation of the developing prototype app versus the existing live app. We used counterbalancing to ensure that, across the 6 participants, the prototype and the live app were each seen first and second an equal number of times. The deliverable included a detailed benchmark of the comparative strengths and weaknesses of live versus prototype.
